High-Resolution Neural Face Swapping for Visual Effects

Swap the appearance of a target actor and a source actor while maintaining the target actor’s performance, using a deep neural network.

Overview

We implemented the neural face-swapping algorithm proposed in the paper High-Resolution Neural Face Swapping for Visual Effects and added our own improvements to it.

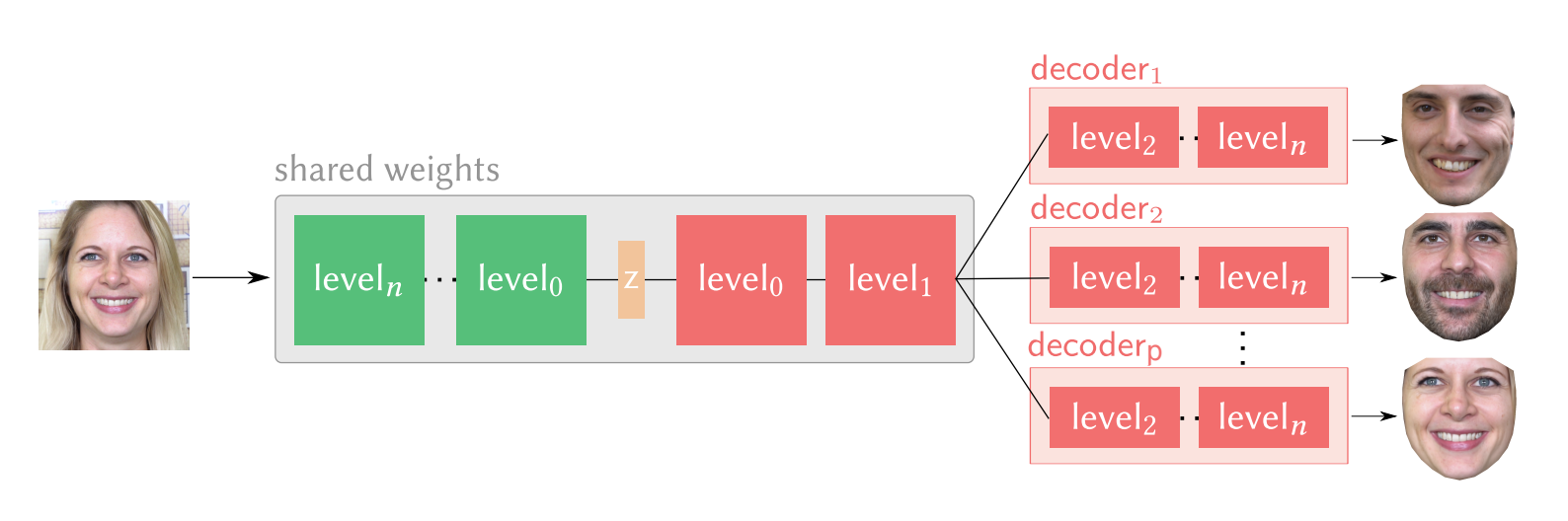

In brief, the network is an encoder-decoder model. The key idea is a shared latent space: the encoder maps an image into the latent space, while the decoders map a latent code back to an image.

- During the training process, images from all identities are embedded in a shared latent space using a common encoder, and these embeddings are then mapped back into pixel space using the decoder corresponding to the desired source appearance.

- At test time, we only need to replace the source identity’s decoder with the target’s, while keeping the common encoder unchanged. All other steps are the same as in training.
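The routing described above (one common encoder, one decoder per identity, and a decoder switch at test time) can be sketched as follows. This is a toy illustration, not the project's network: the class name `FaceSwapModel`, the helpers, the identity names, and the tiny random linear layers standing in for convolutional blocks are all assumptions made for the example.

```python
import random


def make_linear(n_in, n_out, rng):
    """Return a random n_out x n_in weight matrix as nested lists."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


def apply_linear(weights, vec):
    """Multiply a weight matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


class FaceSwapModel:
    """Hypothetical toy model: one shared encoder, one decoder per identity."""

    def __init__(self, identities, pixel_dim=8, latent_dim=4, seed=0):
        rng = random.Random(seed)
        # A single encoder is shared by every identity.
        self.encoder = make_linear(pixel_dim, latent_dim, rng)
        # Each identity owns its own decoder.
        self.decoders = {
            name: make_linear(latent_dim, pixel_dim, rng) for name in identities
        }

    def encode(self, image):
        return apply_linear(self.encoder, image)

    def decode(self, latent, identity):
        return apply_linear(self.decoders[identity], latent)

    def reconstruct(self, image, identity):
        # Training path: decode with the decoder of the image's own identity.
        return self.decode(self.encode(image), identity)

    def swap(self, image, other_identity):
        # Test path: same encoder, but a different identity's decoder.
        return self.decode(self.encode(image), other_identity)


model = FaceSwapModel(["actor_a", "actor_b"])
frame_a = [0.1 * i for i in range(8)]          # stand-in for a flattened image
recon = model.reconstruct(frame_a, "actor_a")  # used for the training loss
swapped = model.swap(frame_a, "actor_b")       # used for the face swap
```

Because both paths share `encode`, the only difference between reconstruction and swapping in this sketch is which entry of `decoders` is selected.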

The paper’s algorithm takes advantage of progressive training to enable the generation of high-resolution images. By extending the architecture and training data beyond two people, the network can also achieve higher fidelity in generated expressions.
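As a rough illustration of progressive training, the sketch below computes which resolution is active at a given training step and how strongly the newest, higher-resolution layers are blended in. It follows the fade-in scheme commonly used for progressively grown networks; whether this project uses exactly this schedule is an assumption, and the function names, resolutions, and step counts are illustrative placeholders.

```python
def progressive_schedule(step, start_res=4, final_res=1024, steps_per_phase=1000):
    """Return (resolution, alpha) for a training step.

    The first level trains for one phase at full weight. Every later level
    gets a fade-in phase (alpha rises from 0 to 1) followed by a
    stabilization phase (alpha stays at 1). All defaults are illustrative.
    """
    if step < steps_per_phase:
        return start_res, 1.0
    step -= steps_per_phase
    level, offset = divmod(step, 2 * steps_per_phase)
    res = start_res * 2 ** (level + 1)
    if res > final_res:
        # Training continues at the final resolution once it is reached.
        return final_res, 1.0
    alpha = min(1.0, offset / steps_per_phase)
    return res, alpha


def blend(upsampled_low, high, alpha):
    """Mix the upsampled lower-resolution output with the new layer's output."""
    return [(1.0 - alpha) * lo + alpha * hi for lo, hi in zip(upsampled_low, high)]


for step in (0, 1000, 1500, 2000, 3000):
    print(step, progressive_schedule(step))
```

With these defaults the resolution doubles every 2000 steps after the first phase, and `blend` shows how a partially faded-in level would combine its output with the previous level's upsampled output.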

Our Work

After numerous experiments and analysis, we made several effective changes to the model, including (1) adding batch-normalization layers at suitable positions and (2) redesigning the loss function.

With our improvements, (1) the network performs more stably on expressions that appear less frequently in the training set, and (2) the generated results preserve more detail (e.g. wrinkles on the face, teeth exposed when laughing, and eye blinks).